Art + Technology Lab grant recipient John Gerrard came into our program with a proposal to use gaming technology to render a large-scale image in real time. After meeting with advisors John Suh of Hyundai, Brian Mulford of Google, and Bryan Catanzaro of NVIDIA, Gerrard became interested in neural networks.

Artificial neural networks are loosely modeled on the networks of neurons in the brain, though on a far smaller scale. In short, they allow computers to learn from data. First, the network is trained on a set of examples, called a training set. It then uses what it has "learned" to recognize or generate new data. For example, a machine trained on a set of 100 cat images can learn to identify cats in images it has never seen before.

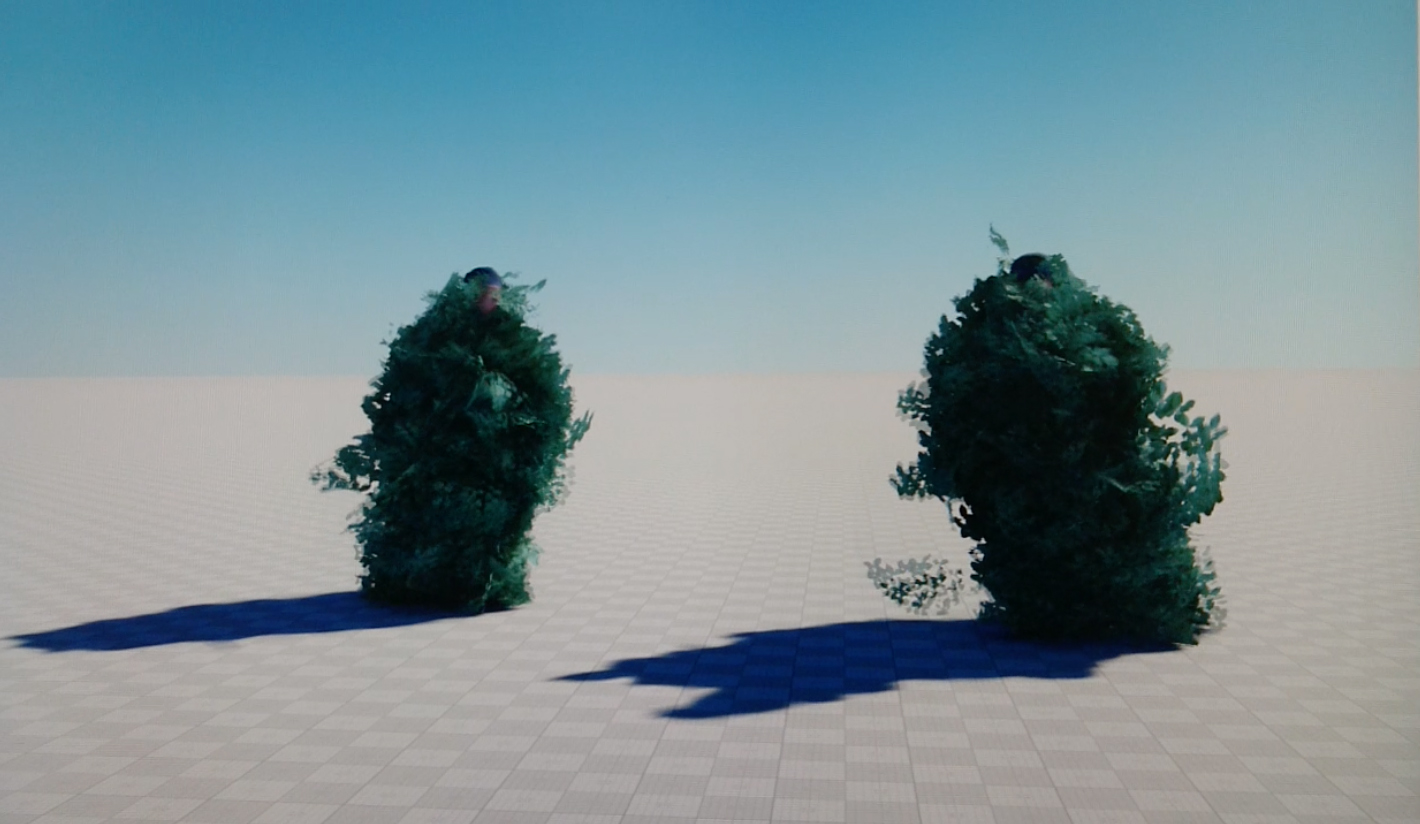

After researching neural networks, Gerrard set out to put this kind of machine learning into practice in his own work. He is "hand-building" a digital model similar to a character found in a battle simulation game, and he is using that character's vernacular martial gestures as a training set from which new gestures are produced. Think of it as machine-generated militaristic choreography.

The artist's team is using athletes and a motion-capture system to record "fight moves," which will make up a training set of around 100 gestures. These gestures will then be processed with Google's TensorFlow, an open-source machine learning framework, to train a neural network that will generate new moves based on the training set. Each new move will then be fed back into the training set, allowing the gestures to keep evolving over time.

The performer will be a figure dressed in a leaf-covered "ghillie" suit, a type of camouflage clothing. Gerrard's team went out into the woods of Vienna to capture images of a model wearing the suit. These images will be incorporated into the virtual 3D model used in the performance.

Titled *Neural Exchange*, the work will launch on the evening of November 1, 2017, with a special event in LACMA's Bing Theater. It will then continue to run on a website. Gerrard himself does not know how the animations will evolve over time. In the artist's words:

“The animations accumulate within the work into perpetuity to unknown end. The original choreographic 'bias' in the original set will be amplified and distorted over time. The only limitations to the work is the storage space of the machine on which the executable file which fulfills the work resides.”

A small publication featuring the interviews Gerrard conducted with the Lab advisors during his research will be handed out at the event.

The Art + Technology Lab is presented by Hyundai.

The Art + Technology Lab is made possible by Accenture, with additional support from Google and SpaceX.

The Lab is part of The Hyundai Project: Art + Technology at LACMA, a joint initiative exploring the convergence of art and technology.